Originally developed by Apple, HTTP Live Streaming (HLS) is a video-streaming protocol that is also supported by Android and other mobile platforms. HLS uses adaptive bitrate streaming to adjust video quality to each viewer’s internet speed and device capabilities. Presently, HLS is required for live streaming on certain mobile devices and is supported by most HTML5 video players.

In this article you will learn about the following topics:

- What is HTTP live streaming?

- How does HLS work?

- What are the pros and cons of HLS?

- How do other live-streaming protocols compare to HLS?

- How do you deploy basic HLS?

- How does Cloudinary help you set up HLS?

What Is HTTP Live Streaming?

HLS is a protocol that streams videos by means of an adaptive bitrate. Initially designed for Apple devices, HLS now works on other devices, including Android phones, smart TVs, and game consoles.

You can deliver HLS videos through standard web servers or content delivery networks (CDNs). During that process, HLS automatically adapts the video quality to match the viewer’s internet speed, smoothly delivering video at any quality level, from 8K (UHD) down to 144p.

How Does HLS Work?

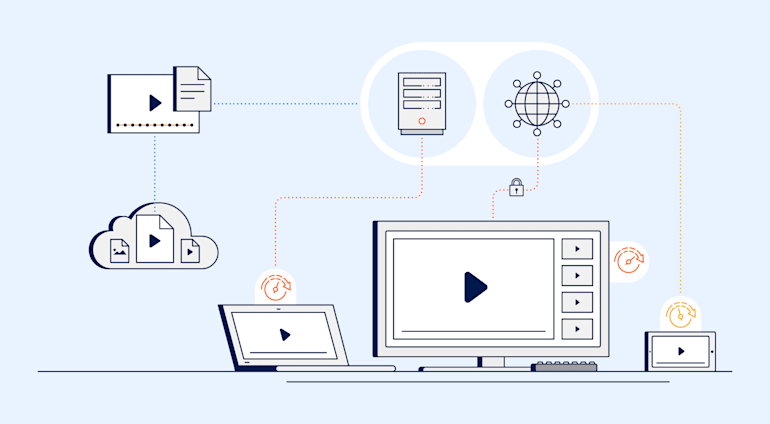

HLS streams videos by leveraging three components: video data, distribution channels, and client devices.

Video Data

HLS can stream videos from two primary sources:

- Content servers for on-demand streaming

- Real-time video sources for live streaming

Before streaming can proceed, two processes must first take place, typically on a server before data distribution starts:

- Encoding, whereby video data is formatted according to either the H.264 or H.265 video-compression standard, which enables devices to recognize and decode data correctly. This process also creates video copies with different quality levels.

- Segmentation, whereby video data is divided into short segments of a standard length of six seconds. Afterwards, index files are created to specify the order and timing in which to play the segments.
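To make the segmentation step more concrete, here is a minimal sketch of how an index file (a media playlist) could be generated for an on-demand video. The function names and the `segment_000.ts` naming scheme are assumptions made for illustration; in practice your encoder or segmenter writes this file for you.

```python
import math


def split_durations(total_seconds, segment_seconds=6.0):
    """Split a video length into segment durations; the last one may be shorter."""
    full, rest = divmod(total_seconds, segment_seconds)
    durations = [segment_seconds] * int(full)
    if rest > 0:
        durations.append(rest)
    return durations


def build_media_playlist(segment_durations, prefix="segment"):
    """Return the text of a VOD media playlist listing segments in play order."""
    # The target duration is an integer upper bound on every segment duration.
    target = math.ceil(max(segment_durations))
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{target}",
        "#EXT-X-MEDIA-SEQUENCE:0",
        "#EXT-X-PLAYLIST-TYPE:VOD",
    ]
    for index, duration in enumerate(segment_durations):
        lines.append(f"#EXTINF:{duration:.3f},")   # how long this segment plays
        lines.append(f"{prefix}_{index:03d}.ts")    # hypothetical segment file name
    lines.append("#EXT-X-ENDLIST")                  # marks the playlist as complete
    return "\n".join(lines) + "\n"


playlist = build_media_playlist(split_durations(20))
print(playlist)
```

For a 20-second video and six-second segments, the playlist lists three full segments followed by one two-second segment.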

Distribution Channels

Once encoded and segmented, videos can be streamed to viewers in direct response to requests made to a content server. Alternatively, streaming can occur through a CDN, with which you can more easily distribute streams across a geographic area and cache data for faster delivery to client devices.

Client Devices

Client devices receive and display video data on smartphones, laptops, desktops, smart TVs, and other connected devices. On receiving a video file, a client device determines the order in which to play the segments according to the index file. Additionally, based on the connection speed, local system resources and screen dimensions, client devices figure out which stream quality to adopt.
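The quality decision described above can be sketched roughly as follows. This is a simplified illustration, not how any particular player works: the bitrate ladder, the `safety` margin, and the function name are all assumptions, and real players also weigh factors such as buffer level and dropped frames.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    bandwidth: int  # bits per second needed to play this rendition
    height: int     # vertical resolution in pixels


# Hypothetical bitrate ladder; actual values depend on your encoding settings.
LADDER = [
    Rendition("360p", 800_000, 360),
    Rendition("720p", 2_800_000, 720),
    Rendition("1080p", 5_000_000, 1080),
]


def choose_rendition(ladder, measured_bps, screen_height, safety=0.8):
    """Pick the highest-bitrate rendition that fits the connection and the screen."""
    budget = measured_bps * safety  # leave headroom for throughput fluctuations
    candidates = [
        r for r in ladder
        if r.bandwidth <= budget and r.height <= screen_height
    ]
    if not candidates:
        # Nothing fits: fall back to the cheapest rendition rather than stalling.
        return min(ladder, key=lambda r: r.bandwidth)
    return max(candidates, key=lambda r: r.bandwidth)


print(choose_rendition(LADDER, measured_bps=4_000_000, screen_height=1080).name)
```

The client repeats this kind of decision as conditions change, which is what lets playback continue at a lower quality instead of stalling.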

For details on streaming, see this post: The Future of Audio and Video on the Web.

Client Support and Latency

HLS is widely supported and is a common way to stream video to mobile devices, tablets, and HTML5 video players.

Traditionally, HLS latency was higher than that of other streaming options, with up to a 30-second delay. In late 2019, Apple introduced an HLS low-latency mode that provides sub-two-second latency for live streaming. Originally, low-latency HLS required changes in the way publishers authored and served video streams, as well as special support by clients and CDNs. However, as of May 2020, low latency is an integral part of the HLS protocol.
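A rough way to see where the traditional delay comes from: players generally buffer several segments before they start playing a live stream, so the delay grows with segment length. The sketch below assumes a buffer of three segments and ignores encoding and network time unless you pass it in as `pipeline_seconds`; both the function and its defaults are illustrative assumptions.

```python
def estimated_live_delay(segment_seconds, buffered_segments=3, pipeline_seconds=0.0):
    """Approximate live delay: buffered segments plus any encode/transfer overhead."""
    return segment_seconds * buffered_segments + pipeline_seconds


for seconds in (10, 6, 2):
    print(f"{seconds}-second segments: about {estimated_live_delay(seconds):.0f} s behind live")
```

Under these assumptions, 10-second segments put the viewer about 30 seconds behind the live source, and shorter segments reduce the delay proportionally.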

What Are the Pros and Cons of HLS?

HLS offers the following benefits:

- HLS’s adaptive-bitrate capabilities ensure that broadcasters deliver the optimal user experience and minimize buffering events by adapting the video quality to the viewer's device and connection.

- Players can automatically adapt and adjust for changes in network speed, preventing stalls when the local connection is unstable.

- HLS is natively supported on Microsoft Edge 12-18, Safari 6+, iOS Safari 3.2+, Android Browser 3+, Opera Mobile 46+, and Chrome for Android 81+.

- It can be deployed on almost all other client devices via an HLS-compatible video player.

Note the flip side of HLS:

- Without low-latency mode, live streaming with HLS is typically delayed by 20 to 60 seconds.

- HLS has less impact on short-form videos. If you're planning to deliver 10-second clips, you're better off using progressive download, a technique that delivers a small part of the video file and downloads the rest while the video is playing.

See Automatic Video Transcoding and Content-Aware Video Compression for more details.

For buffering tips, check out this post: How to Implement Smooth Video Buffering for a Better Viewing Experience.

How Do Other Live-Streaming Protocols Compare to HLS?

To better understand HLS, have a look at how it stacks up against the other live-streaming protocols. Below is a brief comparison of HLS and three of them.

HLS Versus RTMP

Real-Time Messaging Protocol (RTMP) was developed in the mid-2000s by Macromedia for streaming audio and video to its Flash Player. Currently, RTMP is under the auspices of Adobe as a partially open specification.

Previously the default streaming protocol for all delivery networks, RTMP remains the standard for many broadcasters because it is the de facto protocol for inputting video streams from a camera or encoder. However, because Adobe Flash was commonly used to play RTMP in browsers, and modern browsers have retired support for Flash, RTMP is losing relevance as a playback protocol.

Many broadcasters use online video platforms (OVPs) or hosting services, which transform video streams to HLS, causing many CDNs to retire support for RTMP.

HLS Versus MSS

Introduced by Microsoft in 2008, Microsoft Smooth Streaming (MSS) also leverages adaptive bitrates for live streaming. Since it is proprietary to Microsoft devices, adoption of MSS is limited. The platform on which it is most widely used is the Xbox One game console.

HLS Versus MPEG-DASH

Also bitrate adaptive, Moving Picture Experts Group-Dynamic Adaptive Streaming Over HTTP (MPEG-DASH) is the newest of the alternative protocols and the first HTTP-based adaptive-bitrate streaming protocol to become an international standard. Because the protocol is codec agnostic, you can play video with it almost universally, which explains its broad acceptance. MPEG-DASH supports a range of formats, including H.264, H.265, VP8/9, and AV1.

For a tutorial on optimizing MPEG-DASH, see this post: Video Optimization, Part II: Multi-Codec Adaptive Bitrate Streaming.

How Do You Deploy Basic HLS?

This section describes how to deploy HLS. Before you start, ensure that the following requirements are in place:

- A receiver in the form of an HTML page for streaming to a browser, or a client app for streaming to a mobile device or tablet.

- A host in the form of a web server or CDN.

- A utility for encoding your video source. That utility must be able to encode video in fragmented MPEG-4 or MPEG-2 TS files with H.264 or H.265 data, and audio as Advanced Audio Coding (AAC) or Dolby AC-3.
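As one possible encoding utility, FFmpeg can produce H.264 video and AAC audio in MPEG-2 TS segments along with an index file. The article does not prescribe a tool, so treat the sketch below as an assumption: it only builds and prints the command line, and the file names and output directory are placeholders.

```python
import shlex


def ffmpeg_hls_command(source, out_dir, segment_seconds=6):
    """Build an FFmpeg command that encodes a source file into a VOD HLS rendition."""
    return [
        "ffmpeg",
        "-i", source,                       # input video (placeholder name)
        "-c:v", "libx264",                  # H.264 video
        "-c:a", "aac",                      # AAC audio
        "-f", "hls",                        # use FFmpeg's HLS muxer
        "-hls_time", str(segment_seconds),  # target segment length in seconds
        "-hls_playlist_type", "vod",        # write a complete on-demand playlist
        "-hls_segment_filename", f"{out_dir}/segment_%03d.ts",
        f"{out_dir}/index.m3u8",            # the index file for this rendition
    ]


command = ffmpeg_hls_command("input.mp4", "out")
print(shlex.join(command))
```

To actually run the command, you could pass the list to `subprocess.run(command, check=True)` on a machine where FFmpeg is installed and the output directory exists.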

For more information, check out Doug Sillars's article "How HLS Adaptive Bitrate Works."

Step 1: Create an HTML Page and Embed video.js.

An easy way to get started with HLS is to embed a video player like video.js. Video.js is a lightweight player that is responsive and integrates with platforms like YouTube and Vimeo.

Add the code below to embed video.js on your page.

```html
<head>
  <link href="https://vjs.zencdn.net/7.8.2/video-js.css" rel="stylesheet" />
  <!-- Only needed for IE8 support with Video.js versions prior to v7 -->
  <script src="https://vjs.zencdn.net/ie8/1.1.2/videojs-ie8.min.js"></script>
</head>

<body>
  <video
    id="my-video"
    class="video-js"
    controls
    preload="auto"
    width="640"
    height="264"
    poster="MY_VIDEO_POSTER.jpg"
    data-setup="{}"
  >
    <!-- Placeholder file names: replace them with your own media.
         The first source is the HLS playlist, which Video.js 7 plays
         through its bundled HLS support; the others are fallbacks. -->
    <source src="MY_VIDEO.m3u8" type="application/x-mpegURL" />
    <source src="MY_VIDEO.mp4" type="video/mp4" />
    <source src="MY_VIDEO.webm" type="video/webm" />
    <p class="vjs-no-js">
      To view this video please enable JavaScript, and consider upgrading to a
      web browser that
      <a href="https://videojs.com/html5-video-support/" target="_blank"
        >supports HTML5 video</a
      >
    </p>
  </video>

  <script src="https://vjs.zencdn.net/7.8.2/video.js"></script>
</body>
```

Step 2: Configure a Web Server.

Configure a web server from which to serve the video stream. As mentioned previously, any normal web server can serve this purpose. During configuration, associate your file extensions with the correct MIME type to identify data.

The following table shows which file extension is associated with which MIME type.

| File Extension | MIME Type |
|---|---|
| .m3u8 | application/vnd.apple.mpegurl |
| .ts | video/MP2T |
| .mp4/.m4s | video/mp4 |
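If you want to try these associations locally before touching a production server, the following sketch uses Python's built-in `http.server` module and writes the playlist type in its full `application/` form. The class name, the `serve` helper, and the port are assumptions, and this server is meant for testing only.

```python
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer


class HLSRequestHandler(SimpleHTTPRequestHandler):
    """Serve files from the current directory with HLS-friendly MIME types."""

    # Extend the default extension-to-type map with the HLS file types.
    extensions_map = {
        **SimpleHTTPRequestHandler.extensions_map,
        ".m3u8": "application/vnd.apple.mpegurl",
        ".ts": "video/MP2T",
        ".mp4": "video/mp4",
        ".m4s": "video/mp4",
    }


def serve(port=8000):
    """Start a local test server; blocks until interrupted."""
    ThreadingHTTPServer(("", port), HLSRequestHandler).serve_forever()
```

Calling `serve()` from the directory that holds your playlists and segments makes them available at `http://localhost:8000/`. On servers such as Apache or nginx, you would set the same associations in the server configuration instead.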

A few tips:

- To ensure compatibility, if your web server has MIME-type constraints, associate .m3u files with audio/mpegURL.

- Be sure to optimize your files by compressing them. Since index files are often large and frequently redownloaded, compression makes a big difference. A good practice is to compress them before upload. Alternatively, set up real-time gzip compression on your server.

- Another issue with index files is caching. When streaming live broadcasts, index files are often overwritten. To ensure that you deliver the latest version, set a short time to live (TTL) on index files so that caches fetch the current copy instead of serving a stale one. For video on demand, the index file stays the same, so you can skip this step.
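The compression and caching tips can be illustrated with a short sketch. The helper names and the TTL values (2 seconds for live index files, one day for everything else) are assumptions chosen for illustration; pick values that suit your segment length and CDN.

```python
import gzip


def compress_playlist(text):
    """Gzip an index file before upload; playlists are repetitive and compress well."""
    return gzip.compress(text.encode("utf-8"))


def cache_control(filename, live):
    """Suggest a Cache-Control header value for a file in an HLS stream."""
    if filename.endswith(".m3u8") and live:
        return "max-age=2"      # live index files change often: keep the TTL short
    return "max-age=86400"      # segments and VOD index files do not change


# A sample index file with 200 segments, built only to show the size difference.
sample = "#EXTM3U\n" + "".join(
    f"#EXTINF:6.000,\nsegment_{i:03d}.ts\n" for i in range(200)
)
compressed = compress_playlist(sample)
print(len(sample.encode("utf-8")), "bytes before,", len(compressed), "bytes after gzip")
print(cache_control("index.m3u8", live=True))
```

If you upload precompressed index files, remember to serve them with a `Content-Encoding: gzip` response header so that clients decompress them.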

To learn about the progressive web, see this post: Media-Heavy Apps? Progressive Web to the Rescue.

Step 3: Validate Your Streams.

Before streaming media to viewers, validate your streams to ensure that the files play correctly and smoothly. One way of doing that is with the Media Stream Validator, which is part of Apple's HTTP Live Streaming Toolkit.

Media Stream Validator is a CLI utility for verifying local and HTTP URL files. It produces a diagnostic report on any errors or issues found. After adding files to your stream, always run this utility or a similar one as a safeguard.
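As a quick safeguard before running a full validator, you could also script a few basic checks yourself. The sketch below is not a substitute for Media Stream Validator and does not reproduce its checks; it is a hypothetical helper that only verifies the playlist header, the target duration, and that every segment has a duration.

```python
def check_media_playlist(text):
    """Return a list of problems found; an empty list means the basic checks passed."""
    problems = []
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    if not lines or lines[0] != "#EXTM3U":
        problems.append("playlist must start with #EXTM3U")

    target = None
    durations = []
    pending = None  # duration announced by #EXTINF, waiting for its segment URI
    for line in lines:
        if line.startswith("#EXT-X-TARGETDURATION:"):
            target = int(line.split(":", 1)[1])
        elif line.startswith("#EXTINF:"):
            pending = float(line.split(":", 1)[1].split(",", 1)[0])
            durations.append(pending)
        elif not line.startswith("#"):
            if pending is None:
                problems.append(f"segment {line} has no #EXTINF duration")
            pending = None

    if target is None:
        problems.append("missing #EXT-X-TARGETDURATION")
    else:
        for duration in durations:
            # Compare the duration, rounded to the nearest integer, with the target.
            if int(duration + 0.5) > target:
                problems.append(f"segment duration {duration} exceeds target {target}")
    return problems


good = "#EXTM3U\n#EXT-X-TARGETDURATION:6\n#EXTINF:6.000,\na.ts\n#EXT-X-ENDLIST\n"
bad = "#EXT-X-TARGETDURATION:6\n#EXTINF:9.000,\na.ts\nb.ts\n"
print(check_media_playlist(good))
print(check_media_playlist(bad))
```

The first playlist passes, while the second is flagged for a missing header, an over-long segment, and a segment without a duration.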

Step 4: Serve Key Files Securely Over HTTPS.

Depending on your content, you might wish to set up streaming over secure protocols. You can do that with the Apple FairPlay Streaming software development kit (SDK) by directly serving files over HTTPS. Alternatively, serve media files over HTTP, in which case you must encrypt them. You can do that with Apple’s file and stream segmenter tools by setting encryption options. Those options include periodic encryption-key changes, which put a lifecycle on your media by limiting access to a certain period of time.

On playback, encrypted media files are decrypted with the key together with an initialization vector (IV). As with the keys, you can change initialization vectors periodically. For optimal protection, we recommend that you change your keys every three to four hours, and your initialization vectors after every 50 MB of streamed data.
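In the index file, key and IV changes are signaled with `#EXT-X-KEY` tags that apply to the segments that follow them. The sketch below only generates those tags and does not encrypt anything. For simplicity it rotates the key and the IV together after a fixed number of segments rather than by time or data volume, and the key URL layout and function names are assumptions.

```python
import secrets


def key_tag(key_uri, iv):
    """Format an EXT-X-KEY tag for AES-128 encryption with an explicit 128-bit IV."""
    return f'#EXT-X-KEY:METHOD=AES-128,URI="{key_uri}",IV=0x{iv.hex()}'


def playlist_body_with_keys(segments, key_base_url, segments_per_key=2):
    """Return playlist lines, inserting a new key tag every `segments_per_key` segments."""
    lines = []
    for index, (duration, uri) in enumerate(segments):
        if index % segments_per_key == 0:
            key_number = index // segments_per_key
            iv = secrets.token_bytes(16)  # fresh random IV for this group of segments
            # Hypothetical key location; serve it over HTTPS as described in this step.
            lines.append(key_tag(f"{key_base_url}/key_{key_number}.bin", iv))
        lines.append(f"#EXTINF:{duration:.3f},")
        lines.append(uri)
    return lines


segments = [(6.0, "a.ts"), (6.0, "b.ts"), (6.0, "c.ts"), (6.0, "d.ts")]
body = playlist_body_with_keys(segments, "https://keys.example.com")
print("\n".join(body))
```

Each key tag stays in effect until the next one appears, so the first two segments use `key_0.bin` and the last two use `key_1.bin`.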

To protect your keys from being stolen and avoid your encryption efforts going to waste, transfer the keys over HTTPS or another secure method. This can be a tricky task, however, so test the keys internally over HTTP first. That way, if issues surface, you can resolve them before adding HTTPS.

Following are the preliminary requirements for setting up HTTPS key serving:

SSL Certificate

Verify that a signed SSL certificate is on your HTTPS server. If not, obtain and install a valid certificate from a paid or free provider.

Depending on the certificate service you use, either run a certificate client, such as Certbot, or install the certificate directly. For the latter option, be sure to also install any intermediate certificates so that clients can verify the chain from your certificate up to a trusted root certificate.

Authentication Domain

Verify that the authentication domain for your key files matches the domain for your first playlist file. Such a setup enables you to serve your playlist file from your HTTPS server and your other files from HTTP. Since the files cannot be played correctly without the playlist, your videos are secure to an extent.

Next, set up a way for viewers to authenticate themselves. You can do that by building a dialog or a system for storing credentials on the client device. HLS does not offer dialogs, so if you pick that option, you must build the dialog yourself.

For details on HTTP security, see this post: Why Isn't Everyone Using HTTPS and How to Do It With Cloudinary.

How Does Cloudinary Help You Set Up HLS?

With HLS, you create many video copies or video representations, each of which must meet the requirements of various device resolutions, data rates, and video quality. Additionally, you must do the following:

- Add index and streaming files for the representations.

- Create a master file that references the representations and that provides information on and metadata for the various video versions.
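To give a sense of what the master file contains, here is a minimal sketch that writes one for three representations. The bandwidths, resolutions, codec strings, and file paths are assumed example values, not settings that Cloudinary or any specific encoder produces.

```python
def build_master_playlist(variants):
    """Return the text of a master playlist that references each representation."""
    lines = ["#EXTM3U"]
    for variant in sorted(variants, key=lambda v: v["bandwidth"]):
        # Each STREAM-INF tag describes the representation on the following line.
        lines.append(
            f'#EXT-X-STREAM-INF:BANDWIDTH={variant["bandwidth"]},'
            f'RESOLUTION={variant["width"]}x{variant["height"]},'
            f'CODECS="{variant["codecs"]}"'
        )
        lines.append(variant["uri"])
    return "\n".join(lines) + "\n"


# Hypothetical representations of one video.
variants = [
    {"bandwidth": 5_000_000, "width": 1920, "height": 1080,
     "codecs": "avc1.640028,mp4a.40.2", "uri": "1080p/index.m3u8"},
    {"bandwidth": 800_000, "width": 640, "height": 360,
     "codecs": "avc1.4d401e,mp4a.40.2", "uri": "360p/index.m3u8"},
    {"bandwidth": 2_800_000, "width": 1280, "height": 720,
     "codecs": "avc1.64001f,mp4a.40.2", "uri": "720p/index.m3u8"},
]
master = build_master_playlist(variants)
print(master)
```

The player downloads this file first, then picks one of the referenced index files based on the available bandwidth and the screen size.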

That’s a load of grunt work for even one video. Turn to Cloudinary, which automatically generates and delivers all those files from the original video, transcoded to either HLS or MPEG-DASH, or both of those protocols. Called “streaming profiles,” that feature enables you to configure adaptive streaming processes. You can customize the default profile settings to satisfy your requirements. Once those settings are in place, Cloudinary automatically handles all the drudgery for you.

For details on streaming profiles, see the following:

- The article HTTP Live Streaming Without the Hard Work

- The related Cloudinary documentation: HLS and MPEG-DASH adaptive streaming

Other resources are our interactive demos, our FAQ page, and the Cloudinary community forum.

Above all, try out this superb feature yourself. Start with creating a free Cloudinary account.