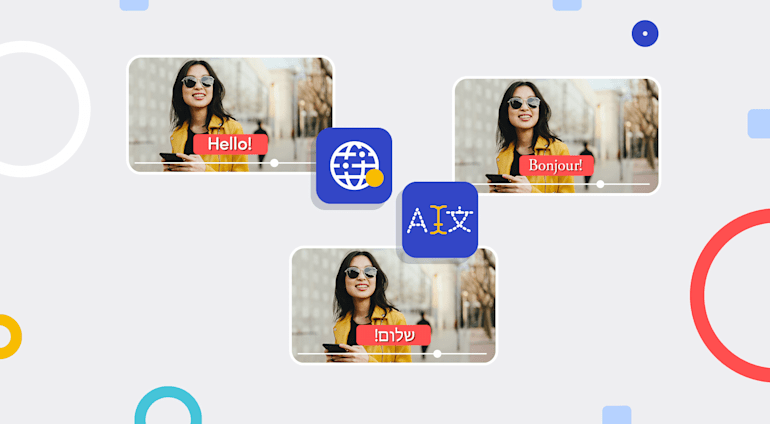

Whatever your business focus (public service, B2B integration, or recruitment), multimedia, and video in particular, is remarkably effective at communicating with an audience. In the past, making video accessible to diverse viewers involved a host of tasks, such as hiring production studios to manually dub, transcribe, and subtitle the content. Those operations were slow and costly, especially for content destined for a global audience.

It’s now a different story with cloud-based tools and services, which enable international outreach for enterprises and small businesses alike. Noteworthy are machine-learning tools that integrate the transcription process into the production cycle with a quick turnaround.

This article describes how Cloudinary's add-ons, including the one for Google's AI Video Transcription (powered by the Google Cloud Speech API), can help you:

- Automate translation into multiple languages.

- Generate subtitles and transcripts.

- Adjust logos and apply overlays and special effects for specific regions.

Adding Subtitles and Transcripts

Subtitles are one of the easiest ways to make videos accessible to a diverse audience: they let viewers understand and enjoy visual content without knowing the language in which the video was recorded. Transcripts, in turn, are extremely helpful for people who are hard of hearing, who can read what is said, and for people with limited vision, who can have the text read aloud by a screen reader.

Although you can use the Google Cloud Speech API without Cloudinary's add-on, doing so requires considerably more configuration. Cloudinary simplifies the process by automatically generating subtitles and transcripts for the videos in your account's Media Library, which you can then display in the video player. You can also translate the text in real time into many supported languages with Cloudinary's Google Translation add-ons, which can also translate your video's metadata (title, description, and tags) to make the video easier to find in search.

Each of these add-ons serves a specific purpose. The transcription add-on, for instance, generates a transcript along with the timing data needed to render the text on screen. An example that transcribes a speech by President Lincoln shows the add-ons in action, alongside others for video tagging and for image moderation and enhancement.
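To make the link between a transcript and on-screen subtitles concrete, here is a minimal Python sketch that turns word-level timestamps into a WebVTT subtitle file. It assumes a transcript shaped as a list of segments, each holding a `words` list of `word`, `start_time`, and `end_time` entries in seconds; that shape and the `to_webvtt` helper are illustrative assumptions, not Cloudinary's documented output format or API.

```python
"""Sketch: convert word-level transcript timings into WebVTT subtitles.

Assumption: each segment looks like
{"words": [{"word": str, "start_time": float, "end_time": float}, ...]}.
This shape is hypothetical; adapt it to the transcript your service returns.
"""


def format_timestamp(seconds: float) -> str:
    """Format seconds as a WebVTT timestamp (HH:MM:SS.mmm)."""
    total_ms = round(seconds * 1000)
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, millis = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{millis:03d}"


def to_webvtt(segments: list[dict], max_words: int = 7) -> str:
    """Group consecutive words into cues of at most `max_words` words."""
    lines = ["WEBVTT", ""]
    for segment in segments:
        words = segment["words"]
        for i in range(0, len(words), max_words):
            chunk = words[i : i + max_words]
            start = format_timestamp(chunk[0]["start_time"])
            end = format_timestamp(chunk[-1]["end_time"])
            lines.append(f"{start} --> {end}")
            lines.append(" ".join(w["word"] for w in chunk))
            lines.append("")  # a blank line terminates each cue
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical timings for the opening words of the Gettysburg Address.
    timings = [("Four", 0.0, 0.4), ("score", 0.4, 0.8), ("and", 0.8, 1.0),
               ("seven", 1.0, 1.4), ("years", 1.4, 1.8), ("ago", 1.8, 2.3)]
    segment = {"words": [{"word": w, "start_time": s, "end_time": e}
                         for w, s, e in timings]}
    print(to_webvtt([segment], max_words=3))
```

Capping cues by word count is the simplest rule; a fuller version might also cap cue duration or break cues at sentence boundaries.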

Translating Embedded Content

Even when the audio is translated, a video's frames may still contain language-dependent information, such as road signs, whiteboards, restaurant menus, and presentation slides. Depending on how viewers interact with your media, you might need to translate that embedded content too. Because it is an integral part of the video, embedded content is just as important as the primary content.

With AI-based optical character recognition (OCR) tools like Cloudinary’s OCR Text Detection and Extraction add-on, you can perform two tasks:

- Extract frames from a video and detect the text that needs translation.

- Translate the extracted text into another language, or expose it as a text alternative (for example, through ARIA attributes) so that screen readers can announce it. The extracted text also adds context about the video.
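Here is a minimal Python sketch of the first task. It builds Cloudinary delivery URLs that extract a still frame at regular intervals (as we understand Cloudinary's URL API, a video URL with a start offset, `so_`, and an image extension such as `.jpg` returns that frame as an image), then merges per-frame OCR results into a deduplicated list of text to translate. The cloud name, public ID, and the shape of `ocr_by_offset` are assumptions; the OCR call itself is omitted because its response format depends on the add-on or API you use.

```python
"""Sketch: sample video frames for OCR and collect unique on-screen text.

Assumptions: the cloud name and public ID are placeholders, and OCR output
is supplied as {frame_offset_seconds: [text lines]}; the OCR step itself
(an OCR add-on or API) is out of scope here.
"""


def frame_urls(cloud_name: str, public_id: str, duration: float,
               every: float = 2.0) -> list[str]:
    """Return one JPEG frame URL per `every` seconds of video."""
    urls = []
    i = 0
    while i * every < duration:
        offset = i * every
        # so_<seconds> picks the frame; the .jpg extension requests an image.
        urls.append(f"https://res.cloudinary.com/{cloud_name}/video/upload/"
                    f"so_{offset:g}/{public_id}.jpg")
        i += 1
    return urls


def collect_text(ocr_by_offset: dict[float, list[str]]) -> list[tuple[float, str]]:
    """Merge OCR lines across frames, keeping the first time each line appears.

    The same sign or slide usually spans many frames, so lines are compared
    case-insensitively with runs of whitespace collapsed.
    """
    seen: dict[str, tuple[float, str]] = {}
    for offset in sorted(ocr_by_offset):
        for line in ocr_by_offset[offset]:
            text = " ".join(line.split())
            key = text.casefold()
            if key and key not in seen:
                seen[key] = (offset, text)
    return list(seen.values())


if __name__ == "__main__":
    print(frame_urls("demo", "menu_tour", duration=6, every=2))
    # Hypothetical OCR output for two sampled frames of a restaurant tour.
    print(collect_text({0: ["Today's Specials", "Soup  of the day"],
                        2: ["today's specials", "Fresh pasta"]}))
```

Each unique line, paired with the time it first appears, is a candidate for translation or for a text alternative next to the player.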

For example, if your application offers a virtual environment such as a restaurant, you can extract the dinner menu from the video frames and link each dish to its ingredients or to the restaurant's location. For a slide presentation, consider extracting the key labels and translating them for a global audience. Whereas subtitles deliver the baseline text, translating the embedded content makes viewers feel included in the entire production.

Video OCR tools such as Google's Video Intelligence API can also report where and when text appears in a video, which tells you exactly which frames need to be updated to display the words and other data, including metadata, in the required language.

AI-powered models can also convert translated text into natural-sounding speech that matches the pitch of the original speaker, even though the new audio is in a different language. Called AI dubbing or synthetic-film dubbing, this approach maintains quality while reaching a wider audience. Because you can provision more computing power to dub multiple videos in parallel, AI dubbing not only saves time and money but also scales better.
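The scalability point rests on each (video, target language) pair being an independent job. The Python sketch below fans those jobs out across a thread pool; `dub_video` is a hypothetical placeholder for whatever transcription, translation, and speech-synthesis calls your pipeline makes, not a real Cloudinary or Google function.

```python
"""Sketch: run independent (video, language) dubbing jobs in parallel.

`dub_video` is a hypothetical stand-in; in a real pipeline it would call
your transcription, translation, and text-to-speech services.
"""
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def dub_video(video_id: str, language: str) -> str:
    """Hypothetical placeholder: return the ID of the dubbed rendition."""
    return f"{video_id}_{language}"


def dub_all(video_ids: list[str], languages: list[str],
            max_workers: int = 8) -> dict[tuple[str, str], str]:
    """Dub every video into every language, running jobs concurrently."""
    jobs = list(product(video_ids, languages))
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() preserves input order, so results line up with jobs.
        results = list(pool.map(lambda job: dub_video(*job), jobs))
    return dict(zip(jobs, results))


if __name__ == "__main__":
    print(dub_all(["welcome", "product_tour"], ["es", "ja"]))
```

Threads fit here because the heavy lifting would happen in remote services; adding capacity means raising `max_workers` or spreading jobs across machines.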

Applying Overlays and Special Effects

Production studios often place overlays on top of a video's final cut: text descriptions or annotations of what is in the frame, a watermark logo, or text-based special effects. Besides translating the content within the video frames with the techniques described above, you can also add localized overlays and watermark logos for each geographical region with the overlay options in Cloudinary's SDK.

For example, to add a text overlay to media programmatically, first translate the content into the local language with Cloudinary's add-ons, and then add the text to the video according to the production's style and theme requirements. This approach saves effort on the production cut and reduces the need for separate postproduction work.
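As a minimal sketch of that two-step flow, the Python function below takes caption text that has already been translated and builds a Cloudinary video URL with a text overlay (`l_text:<font>_<size>:<text>`, followed by color, gravity, and offset parameters). The cloud name, public ID, and hard-coded captions are placeholders standing in for a translation step. As we understand Cloudinary's documentation, commas and slashes in overlay text must be double-escaped (`%252C`, `%252F`); confirm against the current docs before relying on it.

```python
"""Sketch: build a Cloudinary URL that overlays a localized caption on a video.

Assumptions: cloud name, public ID, and the CAPTIONS table are placeholders;
in practice the captions would come from a translation step.
"""
from urllib.parse import quote

# Hypothetical, already-translated captions keyed by locale.
CAPTIONS = {
    "en": "Welcome, friends",
    "es": "Bienvenidos, amigos",
    "de": "Willkommen, Freunde",
}


def encode_overlay_text(text: str) -> str:
    """URL-encode text for l_text, double-escaping commas and slashes."""
    encoded = quote(text, safe="")
    return encoded.replace("%2C", "%252C").replace("%2F", "%252F")


def captioned_video_url(cloud_name: str, public_id: str, locale: str,
                        font: str = "Arial", size: int = 40,
                        color: str = "white") -> str:
    """Overlay the locale's caption near the bottom of the video."""
    text = encode_overlay_text(CAPTIONS[locale])
    # co_: text color; g_south: bottom center; y_: offset from that edge
    overlay = f"l_text:{font}_{size}:{text},co_{color},g_south,y_40"
    return (f"https://res.cloudinary.com/{cloud_name}/video/upload/"
            f"{overlay}/{public_id}.mp4")


if __name__ == "__main__":
    print(captioned_video_url("demo", "promo", "es"))
```

Keeping font, size, and color as parameters lets each production apply its own style guide while the caption text varies by locale.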

Organizations whose brand image or name varies by region often use a different logo in each market. With the same Cloudinary SDK, you can add those logos and watermarks to your media, generating localized content with the correct overlays and text for each region.
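Region-specific logos can be handled as a lookup followed by an image overlay. The Python sketch below picks a logo for a region, falls back to a global logo, and places it in the top-right corner of the video. Cloudinary refers to overlay images by public ID, with folder slashes written as colons (so `logos/acme_de` becomes `l_logos:acme_de`); the region codes, logo IDs, and placement values here are illustrative assumptions.

```python
"""Sketch: pick a region-specific logo and apply it as a watermark overlay.

The region codes, logo public IDs, and placement values are hypothetical.
"""

REGION_LOGOS = {
    "de": "logos/acme_de",
    "jp": "logos/acme_jp",
    "br": "logos/acme_br",
}
DEFAULT_LOGO = "logos/acme_global"


def watermarked_video_url(cloud_name: str, public_id: str, region: str) -> str:
    """Return a video URL with the region's logo in the top-right corner."""
    logo = REGION_LOGOS.get(region, DEFAULT_LOGO)
    layer = logo.replace("/", ":")  # folder separators become colons in l_
    # g_north_east: top-right; w_: logo width; x_/y_: padding; o_: opacity (%)
    overlay = f"l_{layer},g_north_east,w_120,x_20,y_20,o_80"
    return (f"https://res.cloudinary.com/{cloud_name}/video/upload/"
            f"{overlay}/{public_id}.mp4")


if __name__ == "__main__":
    for region in ("jp", "fr"):
        print(region, watermarked_video_url("demo", "promo", region))
```

Keeping the region-to-logo mapping in data rather than code means a rebrand in one market is a one-line change.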

Summing It Up

To reach a broad, varied audience and engage it with the same video content, you must translate and internationalize the associated text. Transcripts, subtitles, translations, and regional overlays greatly enhance the user experience while helping ensure regional compliance. Thanks to automation, the process is no longer as tedious and costly as it once was, and it speeds up your production cycle. Plus, machine-learning models deliver expert-level results in translation, transcription, and localization at scale.

In partnership with Google, Cloudinary offers numerous capabilities that create a more accessible and scalable content library, helping you reach the broadest audience possible worldwide. For details, check out our documentation.