Images and videos are critical for ensuring user engagement on the web. For instance, on a retail website, images of a product from different angles or a 360-degree video of the product can lead to higher conversion rates. For a news website, users are more likely to read articles with visual media accompanying the content. It has been reported that posts that include images produce a 650-percent higher user-engagement rate than text-only posts.

Communicating intent to users through contextual images and videos is important. Most websites focus on identifying the context for users who can interpret the media by sight and sound. But what about users with disabilities? It is equally important to communicate context to those whose disabilities prevent them from interpreting media on websites and mobile apps. The Americans with Disabilities Act (ADA) makes it illegal for any government agency or business in the United States to offer to the public goods and services that are inaccessible to people with disabilities. Entities that offer services and products on the web must comply with the technical requirements in the Web Content Accessibility Guidelines (WCAG) 2.0 Level AA for digital accessibility.

The All-Important Alt Text for Images

One of the most common pitfalls in enabling accessible content is a missing alt attribute on the images in the HTML source. The text in the alt attribute specifies alternative verbiage that is displayed in place of the related image if the image cannot be rendered. More importantly, screen-reader software uses alt text to enable someone who’s listening to the content of a web page, such as a blind person, to understand and interact with the content. According to a survey conducted by WebAIM.org, 89.2 percent of the respondents use a screen reader due to a disability.
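To make the role of the attribute concrete, here is a minimal sketch of emitting an image element with its alt text from Python. The helper name `img_tag` and the sample description are illustrative assumptions, not part of any Cloudinary SDK; the only point is that the description travels in the alt attribute and should be escaped so that quotes in the text cannot break the markup.

```python
from html import escape


def img_tag(src: str, alt: str) -> str:
    """Return an <img> element whose alt attribute carries the description.

    Hypothetical helper for illustration only.
    """
    # quote=True also escapes double quotes, so the description cannot
    # terminate the attribute value early.
    return '<img src="{}" alt="{}">'.format(
        escape(src, quote=True), escape(alt, quote=True)
    )


if __name__ == "__main__":
    print(img_tag("ice_skating.jpg", "A girl ice skating on an outdoor rink"))
```

A purely decorative image would still get an explicit empty value (`alt=""`), which this helper produces when passed an empty string.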

This post demonstrates how you can leverage Cloudinary add-ons and third-party APIs to automate the process of generating alt text for images as part of your media-management pipeline. Through computer vision and machine-learning algorithms, those add-ons and APIs automatically generate keywords and phrases for alt text at scale, which is a tremendous time saver if you have thousands of assets.

Here are the Cloudinary add-ons for media recognition and categorization that can automate the generation of alt text:

- Amazon Rekognition Auto Tagging

- Amazon Rekognition Celebrity Detection

- Google Auto Tagging

- Google Automatic Video Tagging

- Imagga Auto Tagging

Alternatively, several third-party services offer APIs for media recognition and categorization. Some examples are CloudSight, Clarifai, and IBM Watson. Also, by using webhooks on Cloudinary, you can integrate into the pipeline your own machine-learning models, which you can build, train, and deploy on most cloud providers.

Example

The example below shows you how to automatically generate alt text with Cloudinary’s Amazon Rekognition Auto Tagging add-on and CloudSight.

First, subscribe to the add-on and, if applicable, the service of your choice. Afterwards, tagging and maintaining context to generate alt text for images is extremely simple as part of the upload API call to Cloudinary, as shown below:

Ruby:

Cloudinary::Uploader.upload("ice_skating.jpg",

:categorization => "aws_rek_tagging", :auto_tagging => 0.95, :notification_url => "https://mysite/my_notification_endpoint")PHP:

\Cloudinary\Uploader::upload("ice_skating.jpg",

array("categorization" => "aws_rek_tagging", "auto_tagging" => 0.95, "auto_tagging" => “https://mysite/my_notification_endpoint“));Python:

cloudinary.uploader.upload("ice_skating.jpg",

categorization = "aws_rek_tagging", auto_tagging = 0.95,

notification_url = "https://mysite/my_notification_endpoint")Node.js:

cloudinary.uploader.upload("ice_skating.jpg",

function(result) { console.log(result); },

{ categorization: "aws_rek_tagging", auto_tagging: 0.95,categorization: "https://mysite/my_notification_endpoint"});Java:

cloudinary.uploader().upload("ice_skating.jpg", ObjectUtils.asMap(

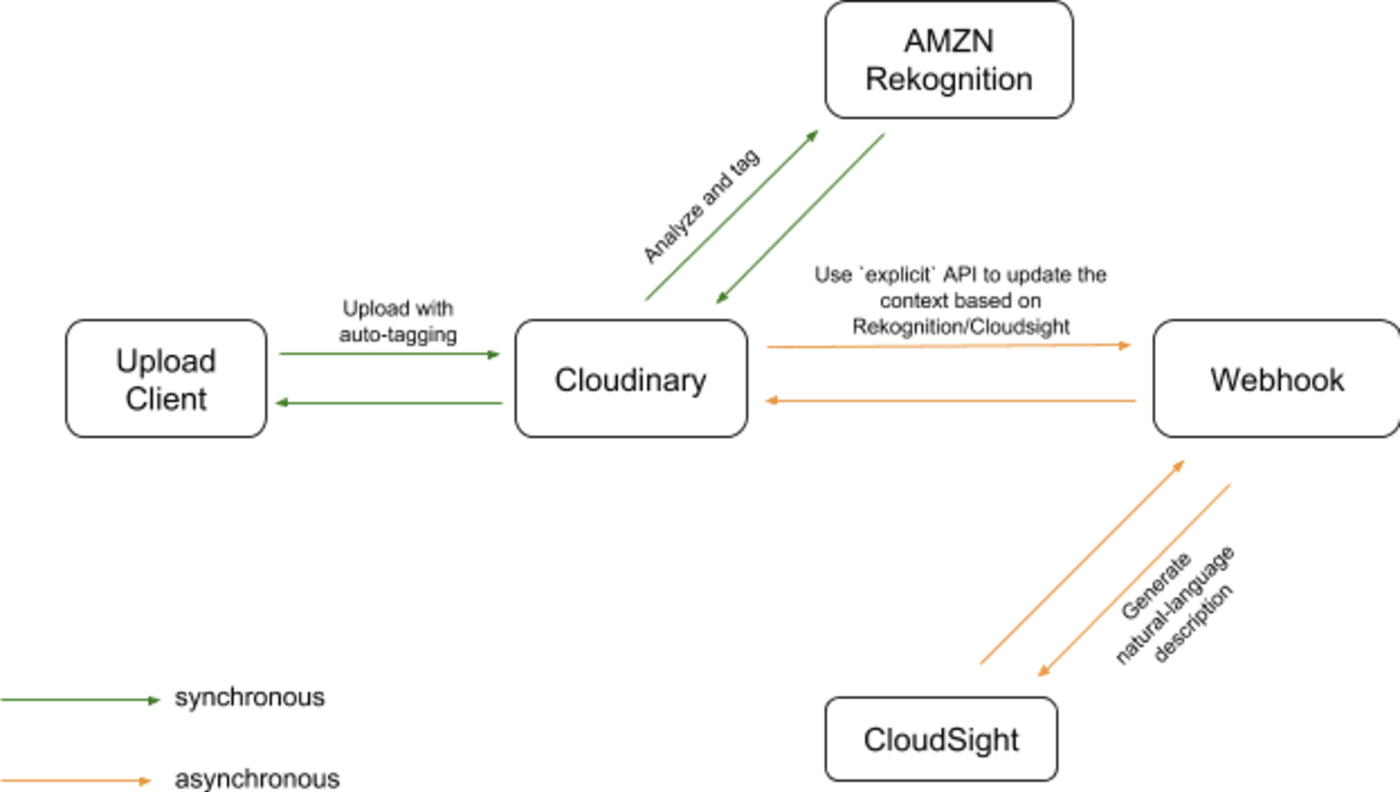

"categorization", "aws_rek_tagging", "auto_tagging", "0.95", "notification_url", "https://mysite/my_notification_endpoint"));The diagram below illustrates a sample workflow for Amazon Rekognition and CloudSight. The green arrows depict the processes that occur synchronously with respect to the upload API call; the orange arrows, those that occur asynchronously.

The process proceeds as follows, step by step:

- You upload images to Cloudinary with the upload API.

- Cloudinary invokes the webhook specified in the notification_url parameter.

- The webhook invokes the CloudSight API to request a natural-language description of the uploaded images. Refer to the CloudSight API documentation for details.

- After receiving the description, the webhook updates the context of the images with the explicit API according to the tags from Amazon Rekognition and CloudSight’s natural-language description.

- When publishing the images to your website, you obtain their context through the Admin API.
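The last two steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the implementation behind the demo: the helper names `compose_alt_text` and `alt_from_resource`, the `alt` context key, the way tags are appended to the description, and the sample inputs are all hypothetical. The network calls are left as comments because they need the Cloudinary SDK and account credentials.

```python
from typing import Iterable, Mapping, Optional


def compose_alt_text(description: str, tags: Iterable[str], max_tags: int = 3) -> str:
    """Combine a natural-language description with a few tags (hypothetical helper)."""
    description = description.strip().rstrip(".")
    lowered = description.lower()
    extra = []
    seen = set()
    for tag in tags:
        key = tag.strip().lower()
        # Skip empty tags, duplicates, and tags the description already mentions.
        if key and key not in seen and key not in lowered:
            seen.add(key)
            extra.append(tag.strip())
    extra = extra[:max_tags]
    if not description:
        return ", ".join(extra)
    if not extra:
        return description
    return "{} ({})".format(description, ", ".join(extra))


def alt_from_resource(resource: Mapping) -> Optional[str]:
    """Read the alt entry from a resource's contextual metadata, if present.

    Assumes the response nests context entries under context -> custom.
    """
    return resource.get("context", {}).get("custom", {}).get("alt")


if __name__ == "__main__":
    alt = compose_alt_text(
        "a girl ice skating on a rink",
        ["Ice Skating", "Person", "Sport", "Winter"],
    )
    print(alt)
    # In the webhook, the result would then be stored as context, e.g.:
    #   cloudinary.uploader.explicit(public_id, type="upload", context={"alt": alt})
    # At publishing time, the context would be read back, e.g.:
    #   alt_from_resource(cloudinary.api.resource(public_id))
```

Keeping the text composition separate from the API calls makes it easy to adjust how tags and description are merged without touching the upload pipeline.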

See the interactive demo below for a few examples of images with pre-generated alt text. You can upload an image of your own and have Amazon Rekognition and CloudSight generate its alt text. Start by clicking Upload under Image. The code-level implementation of the demo is different from the workflow described above.

See the Pen yxBGqV by Harish Jakkal (@harishcloudinary) on CodePen.

Conclusion

Generating meaningful alt text for images to meet the WCAG requirements for digital accessibility can be a daunting task. Not so with Cloudinary, as shown in this post. You can now take a step in the right direction by automating the production of alt text with Cloudinary add-ons or a third-party service of your choice.

That way, you can make media more accessible, simultaneously stimulating and enriching everyone’s browsing experience. What a worthwhile undertaking!